This is the fourth article in our series built around our AI in ABM Benchmarking Study. I touched on barriers in my first post after the B2B Marketing Global ABM Conference, but today I want to go deeper.

Our study shows that enthusiasm for adopting AI in ABM remains strong, yet frustration is growing. That’s my focus here: exploring the barriers ABM-ers encounter, and how some teams are finding ways to overcome them.

I’ll also look beyond our own research and compare what we found with broader research from LinkedIn, Deloitte and KPMG. And I’ll close with a view on how to address these challenges at the root, rather than patching individual symptoms.

What our benchmark reveals

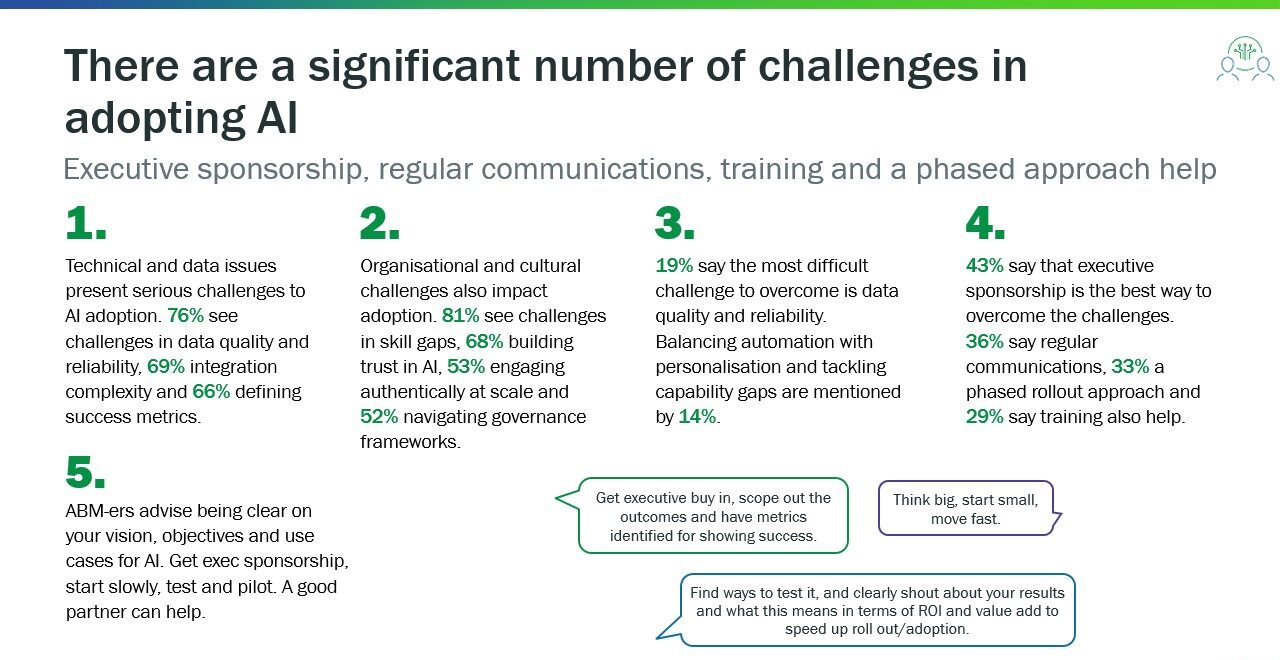

Source: Inflexion Group AI in ABM Benchmarking Study 2025

The chart above highlights the main types of barrier teams face when adopting AI in ABM. On closer inspection, these issues tend to reinforce one another. Whichever challenge teams start with – data quality, skills, trust or governance – they quickly end up encountering all four. They’re different expressions of the same system adjusting as AI moves from experimentation into everyday ABM practice.

With that in mind, let’s look at each category and what it reveals about how AI adoption is unfolding in ABM and the wider marketing landscape.

Taking a step back: what McKinsey’s 2025 State of AI tells us

McKinsey’s 2025 State of AI report makes a point that resonates strongly with our own data: meaningful value only appears when organisations change the structures around the technology. Their survey highlights workflow redesign, senior-level oversight and clearly defined adoption practices as the strongest predictors of impact, and notes that most organisations are still early in this shift¹.

It’s a useful backdrop for our own findings. The barriers ABM teams report map directly onto this broader organisational picture.

Barrier 1: Data quality and integration

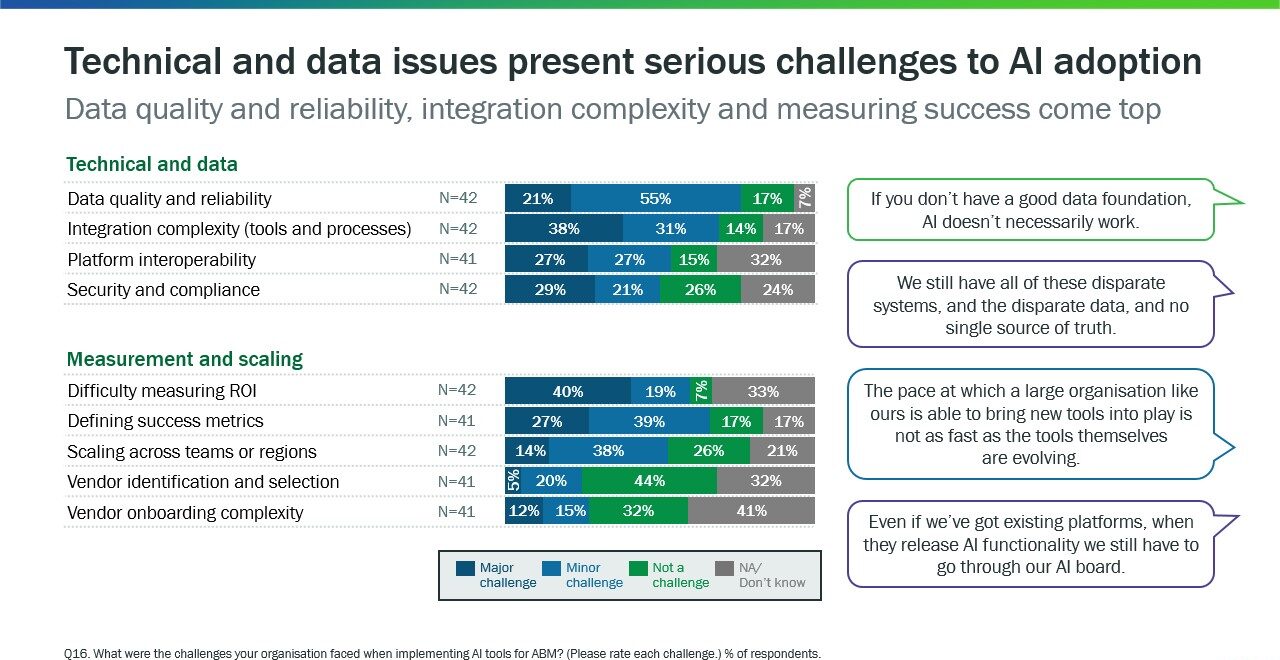

Source: Inflexion Group AI in ABM Benchmarking Study 2025

Data quality and reliability represent the single most significant barrier in our study.

Seventy-six percent of ABM leaders report challenges with data quality; 69% cite integration complexity; and 66% struggle with defining success metrics.

This aligns closely with broader enterprise research:

- KPMG’s 2024 GenAI Survey highlights data quality and privacy as two of the top AI risk mitigation priorities².

- McKinsey similarly notes that inaccuracy is the most commonly experienced GenAI risk, and is closely tied to data quality¹.

For ABM, the stakes are even higher. Poor data doesn’t just lead to weak recommendations; it undermines your credibility in front of senior stakeholders. AI amplifies whatever foundations you give it, good or bad.

Barrier 2: Skills and confidence

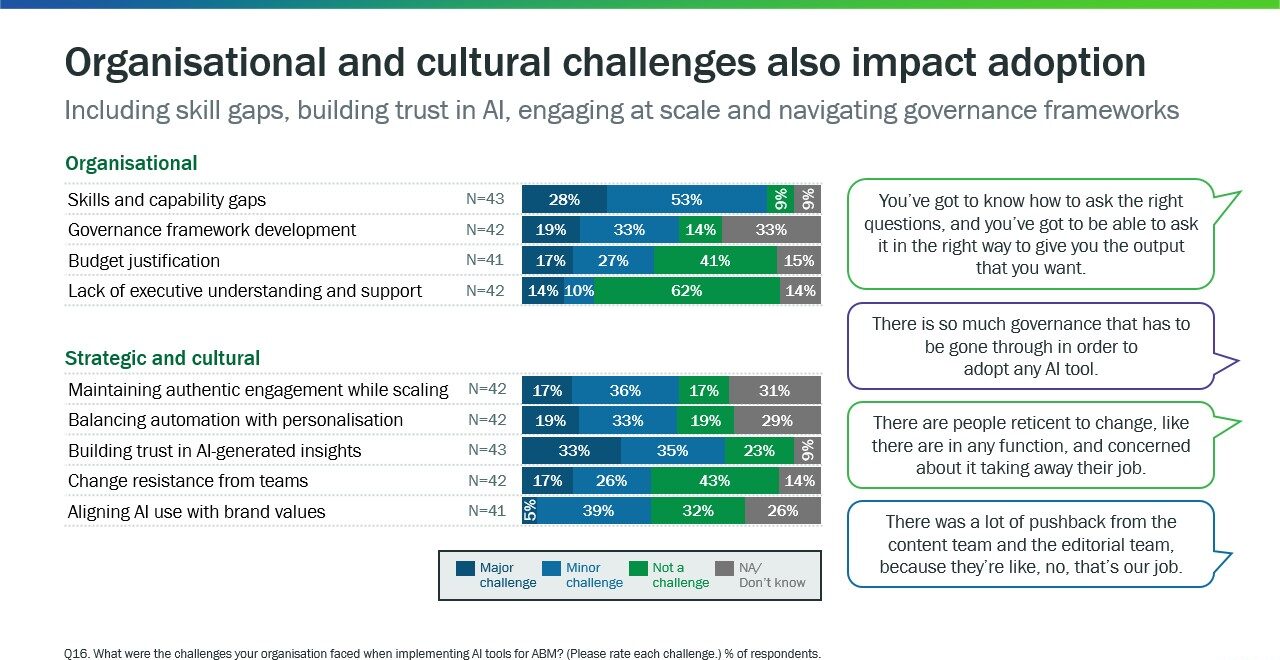

Source: Inflexion Group AI in ABM Benchmarking Study 2025

Eighty-one percent of respondents cite skill gaps, making them the most widespread challenge in the entire dataset.

LinkedIn’s 2024 B2B Marketing Benchmark found similar concerns, with many marketers citing insufficient AI skills and a lack of confidence in applying AI safely³. The pattern is consistent across major studies: most organisations experimented early without simultaneously building the underlying human capability to use AI responsibly and effectively.

In ABM, skill gaps – rightly – tend to produce a more cautious approach, particularly when AI is applied to strategic content, stakeholder communication or insight work.

Barrier 3: Trust, authenticity and the human voice

Taken together with respondents’ comments, the data from the previous two findings shows that trust and authenticity are at the heart of how teams judge whether AI is safe to use in ABM. Building trust in AI-generated outputs and maintaining authentic engagement are not abstract concerns; they bear directly on the work that matters most. Teams want efficiency, but not at the expense of tone, relevance or credibility in front of senior stakeholders. That is hardly surprising when ABM teams focus on the 20% of customers that deliver 80% of profitable revenue.

The broader market echoes this:

- LinkedIn’s 2025 B2B Marketing Benchmark places trust at the centre of B2B marketing effectiveness⁴.

- Deloitte’s State of Generative AI research warns that over-automation risks eroding brand voice and credibility⁵.

For ABM, authenticity is non-negotiable. AI-generated content that feels generic or mechanised is not merely off-brand; it can damage senior relationships that have taken years to build.

Trust, in other words, is not just a principle. It’s a foundational requirement.

Barrier 4: Governance and organisational clarity

McKinsey’s 2025 study identifies CEO-level oversight of AI governance as one of the factors most correlated with bottom-line impact¹. Our benchmark echoes this from a practitioner perspective. More than half of respondents say that navigating governance frameworks is a challenge, and the comments describe a familiar pattern: unclear rules, differing interpretations across functions and approval processes that slow the pace of experimentation. Several respondents noted that Marketing, Sales, Legal and IT often offer conflicting guidance on what is permitted, which naturally creates confusion, caution and delays.

Clear, operational governance accelerates adoption.

Ambiguous governance stalls it — even when the technology itself is ready.

From individual barriers through systemic root causes to solutions

Looking across both our own findings and the global research, the picture is consistent: the barriers aren’t technical imperfections or AI-specific anomalies. They are symptoms of organisational systems that haven’t yet adapted.

Why the barriers persist: the underlying root causes

Before we jump into solutions, it’s worth calling out some underlying dynamics. When you map the patterns across skills, data, trust and governance — and compare them with the wider market signals — three systemic root causes emerge:

- AI has been introduced into workflows that weren’t built with it in mind

- AI exposes the strengths and weaknesses of the underlying data and workflows. As ever, garbage in produces garbage out — only faster.

- Governance remains ambiguous at the operational level

These root causes show up differently in each organisation, but the pattern is remarkably consistent. With that as the backdrop, we can look at what it takes to address each one at the source rather than managing symptoms.

Root Cause 1: AI has been introduced into workflows that weren’t built with it in mind

Across our study, and in broader research such as McKinsey’s 2025 findings¹, a clear pattern emerges: AI is being added to workflows that were never designed to accommodate it. McKinsey notes that redesigning workflows is the strongest predictor of AI’s bottom-line impact, yet the majority of organisations haven’t reached that stage. Our respondents describe the same friction in ABM, where AI often meets inherited processes that are already complex, cross-functional and time-sensitive.

“AI is great until the moment it hits our real process. Then you realise the process itself needs changing, not just the tools.”

What great looks like:

Teams step back before they automate. They revisit the workflow itself, remove unnecessary hand-offs, and build AI into the parts of the ABM process where it genuinely adds leverage — instead of treating it as an add-on to legacy steps. They also invest in role-based training and practical guidance so people know how to work with AI confidently within the redesigned workflow.

Root Cause 2: AI exposes the strengths and weaknesses of the underlying data and workflows. As ever, garbage in produces garbage out — only faster.

Data quality and integration challenges dominate our benchmark: 76% report issues with data quality, and 69% with integration. External studies reinforce this. KPMG ranks data quality among the top AI risk mitigation priorities², and McKinsey identifies inaccuracy as the most commonly experienced GenAI risk¹. In ABM, poor or inconsistent data feeds directly into account strategy, insight work and personalised engagement.

“If the data isn’t right, the AI just gives you wrong answers faster. And then you spend longer fixing them.”

What great looks like:

Teams establish a clear foundation before scaling. They define reliable sources of truth, simplify data flows, and align workflows so AI is drawing from coherent, well-governed inputs. It’s careful, deliberate work — and it pays off quickly.

Root Cause 3: Governance remains ambiguous at the operational level

More than half of respondents say governance is a challenge, and the comments paint a consistent picture: rules that are unclear, processes that vary by function and approval paths that slow experimentation. McKinsey’s 2025 research reinforces the importance of clarity here, identifying CEO-level oversight of AI governance as one of the factors most correlated with bottom-line impact¹. In practice, however, organisations are still translating policy into something Marketing, Sales, IT and Legal can apply consistently.

“Depending on who you ask, the same AI task is fine, not allowed, or ‘under review’. It slows everything down.”

What great looks like:

Accessible, operational governance that tells teams exactly what is permitted, what requires review and where to go for answers. When governance becomes practical rather than abstract, it removes ambiguity and gives teams the confidence to use AI more effectively.

Shaping the system for the value we want

“All that you touch, you change. All that you change, changes you.” — Octavia E. Butler

The barriers we see in ABM aren’t unique. They mirror the foundational challenges organisations face as AI moves from ‘shiny new toy’ into day-to-day work. ABM teams simply feel the impact sooner – and sometimes more acutely – because the margin for error in high-value relationships is small.

The lesson in all of this? Progress isn’t something the tools deliver to us. It’s something we shape. The value we get from AI will be determined by how we work together across teams and functions, how we design the system around the tools, and how we hold ourselves to the standards of ethics and trust that ABM demands.

Further reading

These are the numbered sources referenced above.

- ¹ McKinsey – The State of AI: How organisations are rewiring to capture value (2025). https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-2025

- ² KPMG – GenAI Survey (2024). https://kpmg.com/sg/en/insights/ai-and-innovation/kpmg-gen-ai-survey-2024.html

- ³ LinkedIn – B2B Marketing Benchmark Report (2024), section on AI adoption, skills and confidence. https://business.linkedin.com/content/dam/me/business/en-us/amp/marketing-solutions/images/lms-state-of-b2b-marketing/insights/2024/pdfs/LinkedIn-B2B-Benchmark-2024-Report.pdf

- ⁴ LinkedIn – B2B Marketing Benchmark Report: Trust is the New KPI (2025). https://business.linkedin.com/content/dam/business/marketing-solutions/global/en_US/site/pdf/wp/2025/2025-b2b-marketing-benchmark-trust-is-the-new-kpi.pdf

- ⁵ Deloitte – State of Generative AI in the Enterprise (2024–2025 series). https://www.deloitte.com/us/en/what-we-do/capabilities/applied-artificial-intelligence/content/state-of-generative-ai-in-enterprise.html

ICYMI: The whole series on our benchmarking report to date